Sicilian Translator

| Sicilianu | English |

Tradutturi Sicilianu

- Introduction

- Translation Quality

- Translation Domain

- How to Use the Translator

- Standard Sicilian

- Frequently Asked Questions

made possible by

with resources from

more information

After years of development, our Sicilian translator now translates very well. For example:

- It's better to listen to the quiet words of the wise than the loud shouts of a ruler of fools. Ecc. 9:17 → Megghiu ascutari li paroli zitti dî saggi ca li gridi dû principi dî foddi. Ecc. 9: 17

- È meglio la sapienza della forza, ma la sapienza del povero è disprezzata e le sue parole non sono ascoltate. Ecc. 9:16 → È megghiu la sapienza dâ forza, ma la sapienza dû poviru è disprizzata e li sò paroli nun sunnu ascutati. Ecc. 9: 16

Those are some of its successes. Nonetheless, you will still find a few things that it does not translate well. Please focus on our success.

From our experiments, we learned how to create a good translator for the Sicilian language. And we also had to assemble enough parallel text (i.e. pairs of translated sentences). As you saw, it's a very time-consuming task.

We developed the methods necessary to create a neural machine translator for the Sicilian language. That's why ours provides better translation quality than the ones developed by large companies.

During training, a neural machine translator "learns" through a process of trial and error. First, it predicts a translation. Then it compares its prediction to the correct translation and adjusts the model parameters in the direction that most reduces the errors.

In other words, it needs to make a lot of mistakes before it begins translating properly. And only now – after combining our small dataset of Sicilian-English sentences with a very large dataset of Italian-English sentences and after "back-translating" almost one million Sicilian sentences into English and Italian – do we have a dataset large enough for it to make enough mistakes that yield a good translator.

We assembled our dataset from issues of Arba Sicula and from Arthur Dieli's translations of Sicilian proverbs, poetry and Pitrè's Fables. They have been very helpful to us and we thank them for their support and encouragement.

Translation Quality

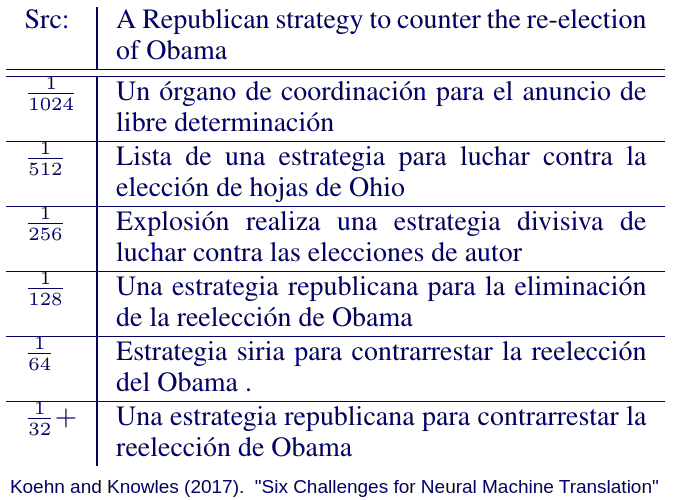

Once upon a time, unexpectedly bizarre translations frequently appeared. It was normal at that stage of development. The translator was producing gibberish because we had not yet assembled enough parallel text. For example, Koehn and Knowles (2017) used varying amounts of parallel text to train several English-to-Spanish models. Below is a table from their paper:

Translation Quality Improves with More Parallel Text

The fractions in the left column are the fraction of the 386 million words provided by the ACL 2013 workshop. At low amounts of parallel text, the model produces fluent sentences that are completely unrelated to the source sentence. But as the amount of parallel text increases, the translation becomes perfect.

And a paper by Sennrich and Zhang (2019) suggests that the method of subword splitting will enable us to create a good translator with a few hundred thousand words (i.e. far less than the millions that Koehn and Knowles needed two years earlier). Subword splitting usually helps the translator find good translations for words that did not appear in the training data or appeared rarely in the training data.

For example, the word jatta (cat) appeared six times in the training data, while its variant gatta only appeared once. Nonetheless, the translator still translates gatta correctly because the words are split into: j@@ atta and g@@ atta.

- Mi piaci jucari cu la jatta. → I like to play with the cat.

- Mi piaci jucari cu la gatta. → I like to play with the cat.

The disadvantage is that other rare words like cravatta (tie), which appears ten times in the dataset, is split into: crav@@ atta. In previous versions of this translator, that splitting caused the word to be incorrectly translated as cat.

And just as the translation input is a sequence of subword units, the translation output is also a sequence of subword units. Usually, merging the subword units will result in a fluent sentence, but occasionally the translator will "make up a word."

For example, one puzzled user asked what a fraggant is. It's my new word for any annoyingly incorrect combination of subword units.

Because we had less parallel training data, we had to use more subword splitting, so our model produced more fraggants. Reducing the vocabulary with subword splitting makes it possible to train a translator with only a few thousand lines of parallel text. But it also returns a lot of fraggants.

Now that we have collected enough parallel text, we need less subword splitting to train a good model, yielding better translation quality and fewer fraggants.

Translation Domain

I wish that this machine could translate my research into Sicilian. But this machine was not trained on economic literature. It was trained on Sicilian literature. So it's not going to translate my Robinson Crusoe model into Sicilian. At best, it might translate the Robinson Crusoe novel into Sicilian.

In general, the sentences that it will translate best are sentences similar to the ones it was trained on.

To cover the core language and grammar, our dataset includes exercises and examples from the textbooks Mparamu lu sicilianu (Cipolla, 2013) and Introduction to Sicilian Grammar (Bonner, 2001). To include dialogue and everyday speech, our dataset includes 34 of Arthur Dieli's translations of Giuseppe Pitrè's Folk Tales. And to cover Sicilian culture, literature and history, our dataset includes prose from 24 issues of Arba Sicula.

To augment our dataset, David Massaro contributed his collection of Bible translations and Marco Scalabrino contributed his translations of American songs.

Finally, to enable multilingual translation and to give our model more examples to learn from, we also included Italian-English text from Farkas' Books, from the Edinburgh Bible corpus, from ParaCrawl, and from Facebook's WikiMatrix and No Language Left Behind project in our dataset. All five are available from the OPUS project.

Sentences similar to the ones found in those sources are the sentences that this machine will translate best. For a good discussion of the domain challenges in machine translation, see the paper by Koehn and Knowles (2017).

To expand our translator's domain, we will need sentences from other domains. One possible source is Wikipedia. If we translated English Wikipedia articles into Sicilian, we could expand Sicilian Wikipedia and expand the domain of our translator. We would be happy to assist in such efforts.

And we'll continue to collect Sicilian language text because we want to develop a good translator for the domain of Sicilian culture, literature and history.

How to Use the Translator

Just type the sentence that you want to translate into the input box, select the appropriate direction (i.e. either "Sicilian-English" or "English-Sicilian") and press the "translate" button.

For best results when translating from Sicilian to English, use the standard Sicilian forms below. For example, use dici (not rici), use bedda (not bella), etc. And do not use apostrophes in the place of the elided i. For example, use mparamu (not 'mparamu), use nzignamunni (not 'nzignamunni), etc.

Standard Sicilian

The Sicilian language presented here does not represent any particular dialect. It presents the language that the neural network learned from translated sentence pairs. For lack of a better word, I call it: standard Sicilian.

Through selection and editing those Sicilian sentences roughly reflect the standards that Prof. Cipolla developed in Mparamu lu sicilianu. Developing a high-quality corpus of Sicilian text requires a standard, so I have tried to implement Prof. Cipolla's standards because he has established a high level of quality in his translations.

And given the nature of the translation task, I augmented his standards with the following differences:

- Italian-style H on aviri verbs: haiu, hai, havi, avemu, aviti, hannu

- strict use of L on articles and object pronouns: lu, la, li

- strict use of apostrophe and circumflex: cu' = cui, cû = cu lu

- strict use of apostrophe and circumflex: du' = dui, dû = di lu

- CI sufficiently denotes ÇI for words like: çiuri

- maintain the R when infinitive is followed by an object pronoun: Pozzu farlu.

The first four differences sharply distinguish important words. In theory, a neural network doesn't need such distinctions because it will learn a set of rules to distinguish the different contexts. In practice, the rule that the neural network often learns is to translate a word, so it's helpful to distinguish words.

The first four differences also allow us to write rules that convert the translator's output from a literary form to a spoken form: Vaiu a la scola → Vaiu â scola. Hai a parrari sicilianu → Hâ parrari sicilianu. Another set of rules allow the translator's input to handle both the literary and spoken forms: Vaiu â scola chî libbra = Vaiu a la scola cu li libbra. Hê parrari cû prufissuri = Haiu a parrari cu lu prufissuri.

The fifth difference, ÇI→CI, helps create an ASCII representation of the language. Because we have less data, it's helpful to reduce what we have to the minimum viable representation. Specifically, prior to translation, the machine first uncontracts (ex.: mappa dû munnu → mappa di lu munnu), then it strips any remaining diacritics (ex.: çiuri → ciuri, farmacìa → farmacia) and converts to lower case.

The final difference is a stylistic difference. In hindsight, I should have consulted Prof. Cipolla on this point. I didn't. So the Sicilian language presented here sometimes reflects this stylistic difference.

Frequently Asked Questions

Why didn't it translate this sentence properly?

The current translator was trained on a dataset with only 20,016 pairs of Sicilian-English sentences. Machine translation models are usually trained on several million pairs. We have tried to create a good translator with less, but it's not entirely possible.

I come from Suttasupra, province of Foraditesta. Can you create a translator for the dialect of my hometown?

When you have 20,016 pairs of Suttasuprisi-English sentences, we'll talk.

Can you help me translate a long document? Can I upload a document for translation?

Yes, of course! That's why we're developing a translator! We want to help everyone write more in Sicilian.

But you have to wait half an hour (30 minutes) because a virtual server hosts our model. It takes acceleration with a GPU, a physical component, to offer the option to upload a document and, two minutes later, download the translation. Please understand that the price of acceleration is too high for this small project.

Send an email to: eryk@napizia.com and he'll reply with a translation (or another solution).

How did you create this translator?

With neural machine translation, a form of artificial intelligence which "learns" how to translate by examining thousands of sentences that humans translated.

Please see my Sicilian NLP pages for a complete explanation. And please come back "behind the curtain" at the Darreri lu Sipariu page, where you can see how the translator works.

Copyright © 2018-2026 Eryk Wdowiak